Beyond the Pixels: From Deep Learning to LLMs in the OCR Arena

In the world of Optical Character Recognition (OCR), Convolutional Neural Networks (CNNs) gave machines the gift of sight, allowing them to detect and decode text from street signs, product labels, and even chaotic handwritten notes. But OCR is evolving fast. The rise of Large Language Models (LLMs) has introduced a new kind of system: one that doesn’t just see text, but can also interpret it in context.

As part of my Deep Learning Bootcamp, I built a web-based MVP to pit two generations of AI against each other. One is a laser-focused vision model: EasyOCR. The other is the versatile and intelligent GPT-4o, a multimodal powerhouse. Let’s dive into the results and explore what happens when brute-force vision meets contextual reasoning.

🛠️ The MVP: Your Personal OCR Showdown

The idea was simple—yet exciting: build an interactive app where users could upload any image and watch two cutting-edge models go head-to-head at extracting text.

The MVP workflow:

- Users upload an image

- It’s processed in real time by both EasyOCR and GPT-4o

- Results are displayed side-by-side
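The workflow above can be sketched in a few lines. This is a minimal illustration, not the app’s actual code: `compare`, `run_engine`, and the two stand-in engines are hypothetical names, and the real app would plug in EasyOCR and GPT-4o calls where the fakes are.

```python
from concurrent.futures import ThreadPoolExecutor
from time import perf_counter
from typing import Callable, Dict, List

# Hypothetical engine type: takes raw image bytes, returns extracted text.
Engine = Callable[[bytes], str]

def run_engine(name: str, engine: Engine, image: bytes) -> dict:
    """Run one engine, recording its output, elapsed time, and any error."""
    start = perf_counter()
    try:
        text, error = engine(image), None
    except Exception as exc:  # one failing engine should not hide the other
        text, error = "", str(exc)
    return {"engine": name, "text": text, "error": error,
            "seconds": perf_counter() - start}

def compare(image: bytes, engines: Dict[str, Engine]) -> List[dict]:
    """Run every engine on the same image in parallel, keeping input order."""
    with ThreadPoolExecutor(max_workers=len(engines)) as pool:
        futures = [pool.submit(run_engine, name, engine, image)
                   for name, engine in engines.items()]
        return [future.result() for future in futures]

# Stand-in engines so the sketch runs without any OCR dependency.
def fake_easyocr(image: bytes) -> str:
    return "OPEN 24 HOURS"

def fake_gpt4o(image: bytes) -> str:
    return "Open 24 hours"

results = compare(b"fake image bytes",
                  {"EasyOCR": fake_easyocr, "GPT-4o": fake_gpt4o})
for r in results:
    print(f"{r['engine']:>8}: {r['text']!r} ({r['seconds']:.3f}s)")
```

Running both engines in threads keeps the slower one (typically the network call) from blocking the other, and capturing errors per engine means the page can still show one column if the other fails.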

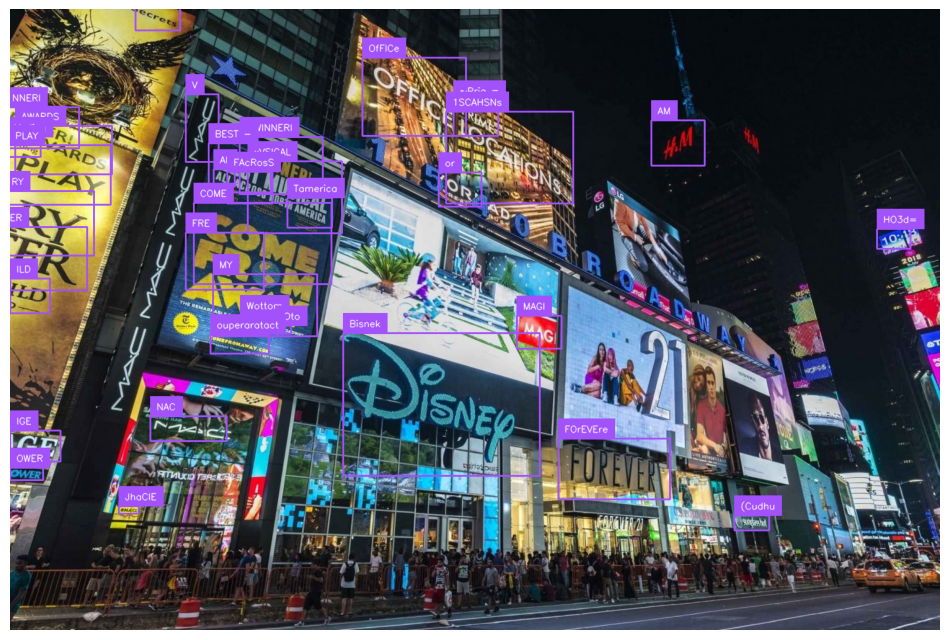

EasyOCR output:

GPT-4o output:

🤼 The Contenders: Specialized Vision vs. Contextual Intelligence

These models come from different corners of the deep learning universe. EasyOCR is a purpose-built tool designed solely for visual text detection, while GPT-4o is a generalist—trained to understand and reason with text and images together.

🟢 The Specialist: EasyOCR

EasyOCR is a dedicated OCR engine built for speed and accuracy. Its architecture is a two-step process:

- Detection – using CRAFT (Character Region Awareness for Text), it scans the image for regions that likely contain text.

- Recognition – a CRNN, which pairs a convolutional feature extractor with LSTM (Long Short-Term Memory) sequence layers and a CTC decoder, reads the characters from each detected region.

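```python
import easyocr

image_path = 'street_image.jpg'

# Load the English model once; gpu=True uses CUDA when it is available.
reader = easyocr.Reader(['en'], gpu=True)

# Each result is a (bounding box, text, confidence) tuple.
results = reader.readtext(image_path)

for (bbox, text, confidence) in results:
    print(f"Text: {text}, Confidence: {confidence:.2f}")
```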

✅ EasyOCR Superpowers:

- Speed & Efficiency: Blazing fast, especially for batch processing

- Precision: Returns accurate bounding boxes, crucial for forms or documents

- Offline-Friendly: Runs locally, no internet required
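To show why the bounding boxes matter, here is a small sketch that turns raw detections into text lines in reading order. It assumes the result shape shown earlier, a list of `(bbox, text, confidence)` tuples where `bbox` is four `[x, y]` corner points; the `to_lines` helper, the 0.5 confidence threshold, and the sample data are illustrative assumptions, not part of EasyOCR.

```python
from typing import List, Sequence, Tuple

Point = Sequence[float]
Detection = Tuple[Sequence[Point], str, float]  # (bbox, text, confidence)

def to_lines(results: List[Detection], min_conf: float = 0.5) -> List[str]:
    """Drop low-confidence detections and group the rest into text lines."""
    boxes = []
    for bbox, text, conf in results:
        if conf < min_conf:
            continue
        xs = [p[0] for p in bbox]
        ys = [p[1] for p in bbox]
        boxes.append((min(ys), max(ys), min(xs), text))
    boxes.sort(key=lambda b: (b[0], b[2]))  # top to bottom, then left to right

    lines: List[list] = []  # each entry: [top, bottom, [(left, text), ...]]
    for top, bottom, left, text in boxes:
        center = (top + bottom) / 2
        # Same line if this box's vertical center falls inside the line's span.
        if lines and lines[-1][0] <= center <= lines[-1][1]:
            lines[-1][2].append((left, text))
        else:
            lines.append([top, bottom, [(left, text)]])
    return [" ".join(t for _, t in sorted(words)) for _, _, words in lines]

# Hand-made detections standing in for reader.readtext(...) output.
sample = [
    ([[120, 12], [200, 12], [200, 40], [120, 40]], "24", 0.98),
    ([[10, 10], [110, 10], [110, 40], [10, 40]], "OPEN", 0.95),
    ([[10, 60], [150, 60], [150, 90], [10, 90]], "HOURS", 0.91),
    ([[160, 62], [170, 62], [170, 88], [160, 88]], "|", 0.12),
]
print(to_lines(sample))  # ['OPEN 24', 'HOURS']
```

The grouping rule is deliberately simple and will struggle with rotated or multi-column layouts; it is only meant to show what coordinates make possible.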

⚠️ Limitations:

- No Context Awareness: It reads characters, not meaning. If a smudged “W” is misread, nothing in the pipeline uses the surrounding words to correct it.

- Weak on Complex Inputs: Struggles with stylized fonts, poor image quality, or abstract layouts
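One common workaround for the missing context is to post-process the output against a known vocabulary. The sketch below uses Python’s standard `difflib` for fuzzy matching; the `correct_tokens` helper, the vocabulary, and the 0.7 cutoff are assumptions chosen for illustration, and this step was not part of the MVP.

```python
import difflib
from typing import Iterable, List

def correct_tokens(tokens: Iterable[str], vocabulary: List[str],
                   cutoff: float = 0.7) -> List[str]:
    """Replace each token with its closest vocabulary word, if close enough."""
    known = {word.upper(): word for word in vocabulary}
    fixed = []
    for token in tokens:
        match = difflib.get_close_matches(token.upper(), list(known),
                                          n=1, cutoff=cutoff)
        # Keep the original token when nothing in the vocabulary is similar.
        fixed.append(known[match[0]] if match else token)
    return fixed

vocab = ["WELCOME", "TO", "NEW", "YORK"]
print(correct_tokens(["VVELCOME", "T0", "NEW", "Y0RK"], vocab))
# ['WELCOME', 'T0', 'NEW', 'YORK']
```

Note that “T0” is left alone: with only two characters, one wrong character drops the similarity score to 0.5, below the cutoff. Short tokens carry too little signal for string similarity alone, which is exactly the gap that contextual models aim to fill.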

🔵 The Generalist: GPT-4o with Vision

GPT-4o is not an OCR model in the traditional sense. It’s a multimodal LLM trained on massive datasets of both text and images, so when you show it an image it can both “see” the content and use its language abilities to interpret what it’s looking at. In this case, the bounding boxes drawn on the output image are for reference only.

```python
from openai import OpenAI

# With no arguments, the client reads the key from the OPENAI_API_KEY
# environment variable, which keeps secrets out of the source code.
client = OpenAI()

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "Extract all the text from this image. Preserve the order and formatting as much as possible."},
                {
                    "type": "image_url",
                    "image_url": {
                        "url": "https://c8.alamy.com/comp/R5AFR5/new-york-city-advertising.jpg",
                    },
                },
            ],
        }
    ],
    max_tokens=300,  # caps the reply length; raise it for text-heavy images
)

print(response.choices[0].message.content)
```
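The example above points at a public URL, but in an upload flow the image arrives as raw bytes. A minimal sketch of one way to handle that, assuming the `image_url` field also accepts a base64 `data:` URL: the `build_messages` helper and its defaults are hypothetical names, and the bytes below are a placeholder rather than a real image.

```python
import base64

def build_messages(image_bytes: bytes, mime_type: str = "image/jpeg",
                   prompt: str = "Extract all the text from this image.") -> list:
    """Build a chat `messages` list that embeds the image as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url",
             "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }]

# Placeholder bytes; in the app these would come from the uploaded file.
messages = build_messages(b"\xff\xd8\xff placeholder bytes")
print(messages[0]["content"][1]["image_url"]["url"][:23])
```

The returned list can be passed as the `messages` argument of the request shown earlier, so no file hosting is needed between upload and extraction.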

✅ Why GPT-4o is Brilliant:

- Context-Aware Output: It doesn’t just read text, it interprets it, and it usually handles lists, headers, jokes, and even sarcasm sensibly.

- Flexible Formatting: You can ask for JSON, markdown, or paragraph text.

- Handles Messy Inputs: It can often recover text from blurry, rotated, or chaotic layouts by using context to resolve what the pixels leave ambiguous.
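When you ask for JSON, the reply is still a string, and in practice it sometimes arrives wrapped in a Markdown code fence. The sketch below shows one defensive way to parse it. The `parse_json_reply` helper and the `lines` key are illustrative assumptions, and the sample reply is hand-written rather than real model output.

```python
import json
import re

FENCE = "`" * 3  # built indirectly so this example can sit inside a fenced block

def parse_json_reply(reply: str):
    """Parse a model reply as JSON, tolerating an optional Markdown code fence."""
    pattern = re.escape(FENCE) + r"(?:json)?\s*(.*?)" + re.escape(FENCE)
    fenced = re.search(pattern, reply, flags=re.DOTALL)
    payload = fenced.group(1) if fenced else reply
    return json.loads(payload.strip())

# Hand-written stand-in for a model reply that wraps its JSON in a fence.
reply = FENCE + 'json\n{"lines": ["OPEN 24", "HOURS"]}\n' + FENCE
print(parse_json_reply(reply)["lines"])  # ['OPEN 24', 'HOURS']
```

If the reply is not valid JSON at all, `json.loads` raises `json.JSONDecodeError`, which is a reasonable point to retry the request or fall back to plain text.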

⚠️ Challenges:

- Heavyweight Model: Requires API access to cloud infrastructure (OpenAI), which adds cost and latency

- No Bounding Boxes: Great for text understanding, but not ideal for precise document layout analysis

🏁 The OCR Race: Extraction vs. Understanding

LLMs are changing the game. OCR is no longer just about finding text—it’s about understanding language, layout, and intent—all at once. Whether you’re building a document parser, a business automation tool, or even a meme decoder, LLMs like GPT-4o unlock possibilities that were out of reach just a few years ago.

The journey from clustering pixels to grasping meaning has been nothing short of amazing.

Note: The live web app is no longer running, but you’ll find classic example outputs above to illustrate the showdown!